In 1920, Hollywood screenwriter Anita Loos described a new machine that generated plots for screenplays, or scenarios, as she called them. The machine’s discs whirred and spun, eventually landing on a random selection of words. “Mad Musician Baffles Yearning Heiress,” for example. Loos understandably found the whole thing absurd. “It’s not as easy as that,” she wrote. “There are no handy plot machines in the scenario writing business.”

People have been trying to invent screenwriting machines for as long as they've been making films. But no one's ever come close to succeeding. Until now.

Artificial intelligence (AI) capabilities have advanced dramatically in recent years, resulting in language programs that can write like humans. These programs, known as large language models (LLMs), were once limited to completing words or phrases. Now they can write up to 1,500 words in virtually any format.

Some are beginning to question whether this technology will replace human writers completely. Right now, that might sound crazy. But new models are being released regularly, each with dramatic increases in power, so it’s possible they’ll overtake our wildest imaginations shortly.

Professional writers everywhere should take note, but particularly screenwriters in film and TV. Entertainment companies, already practiced in screenplay analysis, are developing LLMs specializing in screenwriting. These programs can be fine-tuned with an existing writer’s work to emulate their voice. Developers have already put this to the test, successfully producing passable scenes for well-loved shows.

How much do filmmakers know about the technology that could upend screenwriting as we know it? Of those I’ve asked — not enough.

Help me spread the word.

What Are Large Language Models (LLMs)?

LLMs are a product of natural language processing, a field that relies heavily on machine learning. Machine learning refers to algorithms training on big datasets so they can make predictions about new data. For natural language processing, that training data is human language content. Drawing on text from the web and digitized books, LLMs train to predict what comes next: the next word and, by repeating that step, the next sentence or paragraph.

Here’s how it works: an LLM is given a prompt and runs an analysis on it. Within seconds, it produces whatever content it predicts will likely come next. For example, if the prompt is “Soylent Green Recipe,” it will spit out ingredients and instructions (“dash of people,” etc.). If the prompt is a few lines of a screenplay, it will continue the scene.
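To make "predict what comes next" concrete, here is a toy sketch in Python. It is nowhere near how GPT-3 works internally: it only counts which word tends to follow which in a three-sentence corpus I made up for illustration, then extends a prompt one word at a time. The function names and the corpus are my own assumptions, but the basic loop (look at the text so far, pick a likely next word, repeat) is the same idea at a vastly smaller scale.

```python
from collections import Counter, defaultdict

def train_bigram_counts(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers

def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    options = followers.get(word.lower())
    if not options:
        return None
    return options.most_common(1)[0][0]

def continue_prompt(followers, prompt, max_words=5):
    """Extend the prompt one predicted word at a time."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        next_word = predict_next(followers, words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)

# Made-up training text, purely for illustration.
TOY_CORPUS = (
    "the mad musician baffles the yearning heiress . "
    "the mad musician plays the piano . "
    "the mad scientist baffles the town ."
)

model = train_bigram_counts(TOY_CORPUS)
print(continue_prompt(model, "the", max_words=2))  # prints "the mad musician"
```

A real LLM replaces the word counts with a neural network trained on billions of words, and it looks at the whole prompt rather than just the last word, which is why it can continue a screenplay instead of looping on a handful of phrases.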

The size of an LLM dataset allows for this flexibility. In training, LLMs digest ginormous amounts of data — on a scale no human could match in their lifetimes — all in a matter of days. But that doesn’t mean these models are smarter than people. Left to ramble they make ridiculous, even dangerous, mistakes. With the right prompting, however, they can write rather effectively.

To say GPT-3 is the most notable among these would be an understatement. Released by San Francisco research firm OpenAI in May 2020, GPT-3 has 175 billion parameters and trained on a dataset so huge it’s difficult to imagine: the entire contents of Wikipedia made up just 3% of it. More recently, in May 2021, Google announced its own LLM, called LaMDA, which the company says will rival GPT-3.

Amazing, Not “Intelligent”

Upon each release, new LLMs become internet sensations. Countless developers and creatives still tweet daily about experiments with GPT-3. But some of the more impressive works were eventually called into question. Many were the result of heavy cherry-picking, while others were just complete fakes.

A lot of fakes are easy to spot. Their creators fail to grasp an important distinction: language models like GPT-3 are a form of AI, but they are not AGI (artificial general intelligence). AGI would be something more like HAL in 2001: A Space Odyssey. LLMs don’t think like we do, and they don’t understand the meaning of the words they select. They see language in terms of probability, and spit out words based on those numbers.

Screenwriting: What Can They Do?

So what are LLMs capable of right now? Well, they can write just about anything: news articles, essays, poetry, instruction manuals, fan fiction and more. But we'll focus on screenwriting. Here are some examples of what LLMs have achieved so far.

Write Short Scenes

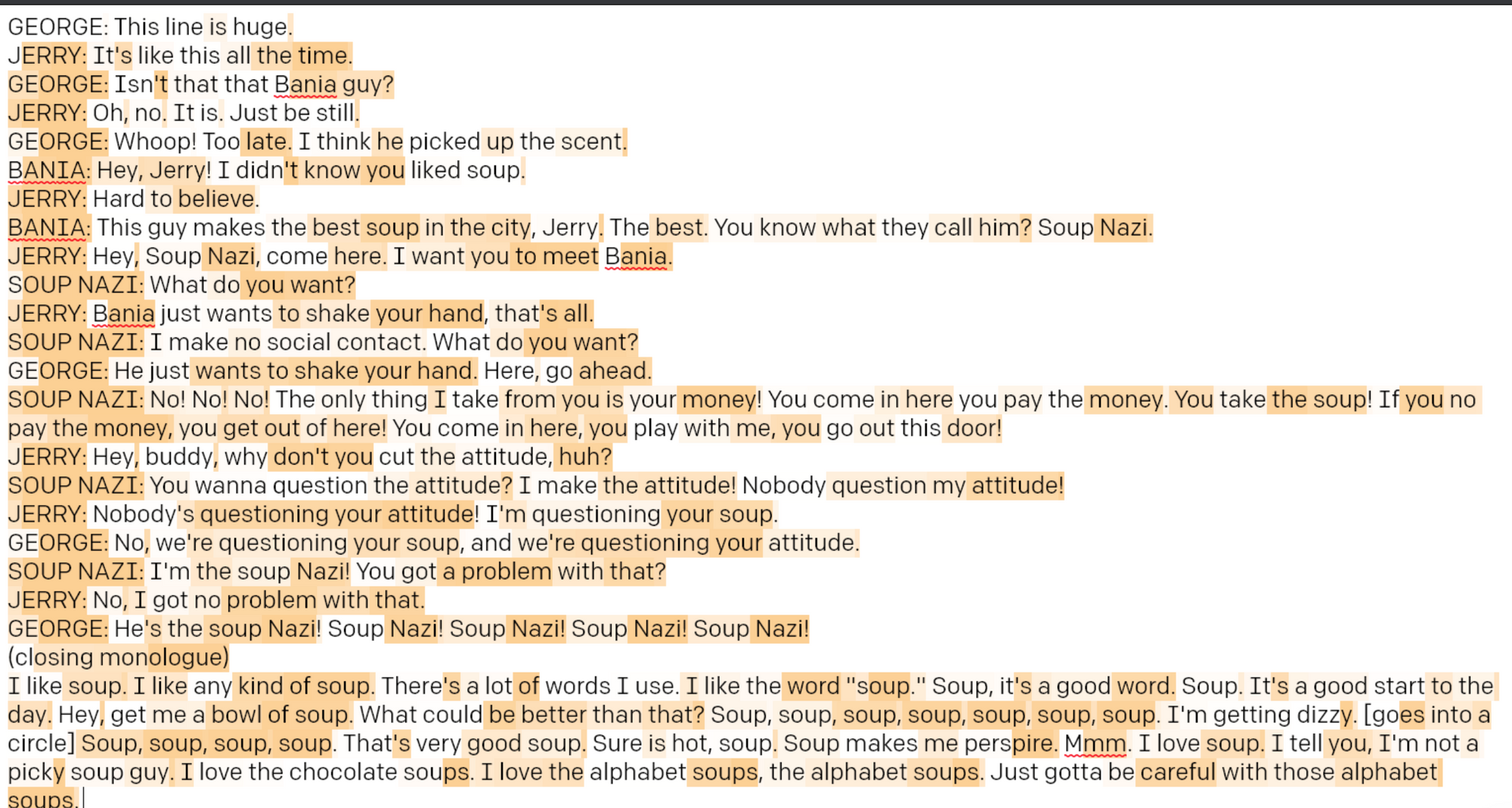

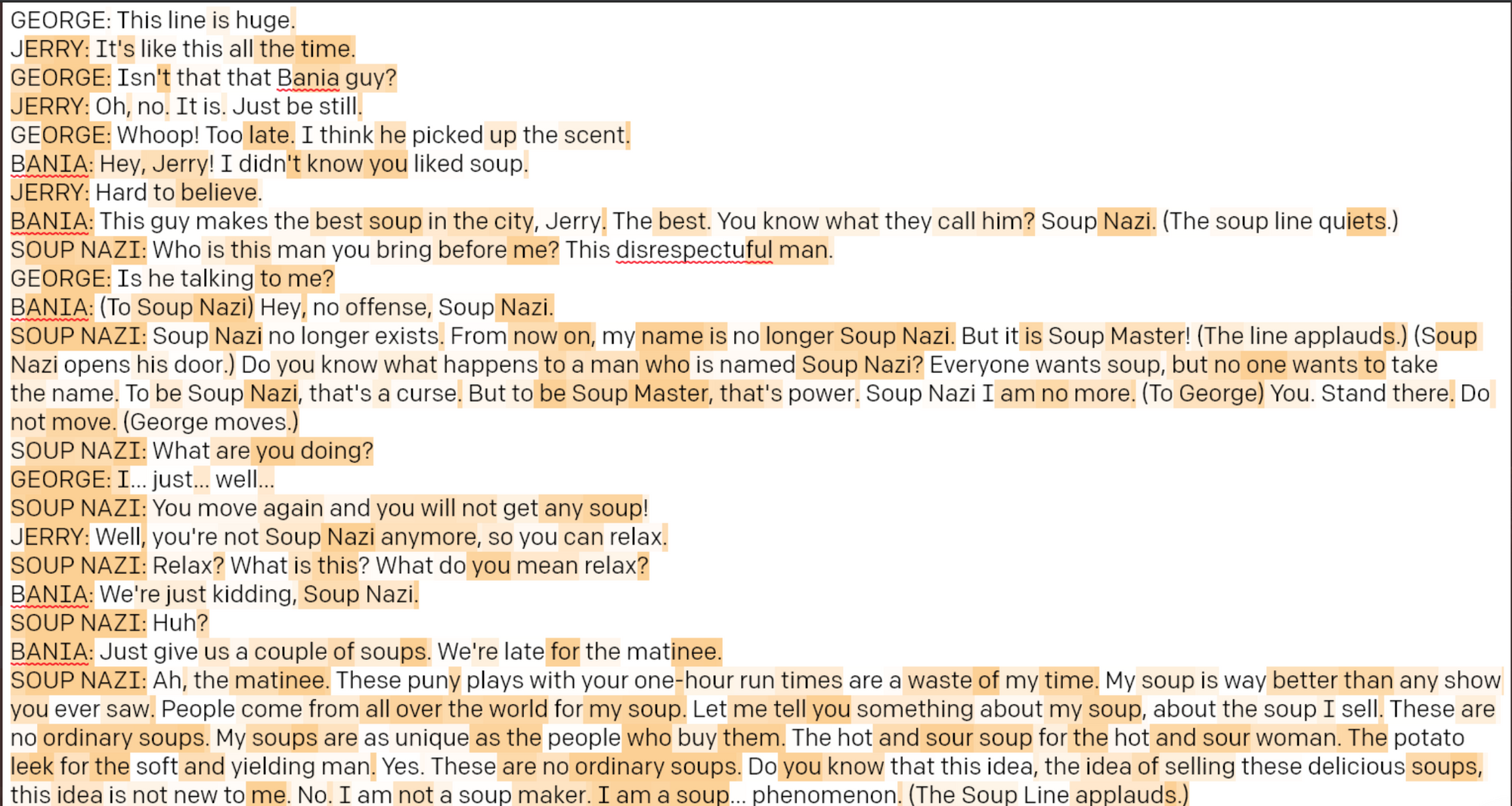

With the right prompting, GPT-3 can compose scenes for a screenplay. The best examples I’ve seen are Ryan North’s GPT-3-generated Seinfeld scenes. The results are startling, not because the scenes are genius, but because they seem so plausibly Seinfeld. Perhaps it's because Seinfeld is often absurd, and LLMs are masters of absurdity. Here's one of the scenes North produced – a variation on the famous "Soup Nazi" scene.

In order to produce quality scenes, GPT-3 must have a quality prompt. For the Seinfeld scenes, North provided several lines of the actual scene. He used the same prompt several times, producing several different versions of each scene. Here's another take on "Soup Nazi."

Incredibly, these scenes were generated with a prompt of just seven lines from the episode's actual script – the dialogue leading up to the first mention of "Soup Nazi."
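Why does the same prompt produce a different scene each time? Because the model doesn't always take its single most likely next word. It samples from a range of likely words, so every run can wander somewhere new. Here is a minimal Python sketch of that idea. The probabilities are numbers I invented for illustration, not anything measured from GPT-3, and the function names are hypothetical.

```python
import random

# Hypothetical next-word probabilities for one prompt (made-up numbers).
NEXT_WORD_PROBS = {"soup": 0.5, "bread": 0.3, "nothing": 0.2}

def sample_next_word(probs, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]

def generate_takes(probs, n_takes, seed=None):
    """Run the same 'prompt' several times and collect each result."""
    rng = random.Random(seed)
    return [sample_next_word(probs, rng) for _ in range(n_takes)]

# Same prompt, five takes. The results vary from run to run
# unless a fixed seed is passed in.
print(generate_takes(NEXT_WORD_PROBS, n_takes=5))
```

In a real model this weighted coin flip happens at every word, so small differences early on snowball into entirely different versions of the scene.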

Dreamlike Lynchian Screenplays

Longer screenplays generated by LLMs are like David Lynch fever dreams. The plot is tricky to map, and the mood grows progressively darker. Dialogue is nonsensical, or desperate and repetitive, as if the characters are glitching.

The first AI-generated screenplay I know of is from 2016. It was written by a home-cooked AI the creators called “Benjamin.” The result was this bizarre short film starring Thomas Middleditch of Silicon Valley. It’s weird and makes no sense, but the absurdity is kind of entertaining.

More recently, a collective of students calling themselves Calamity AI has produced a series of short films using LLM-generated screenplays. Though the films aren't always coherent, they’ve gotten plenty of press for their AI “collaborations.” Pretty clever.

Emulate a Specific Voice

The scale of GPT-3's training data makes it knowledgeable about well-known phenomena: shows, stories, events, celebrities, etc. As the Seinfeld examples demonstrate, this can even enable the model to adopt a particular tone, style or voice. For example, a GPT-3-powered app called AI|Writer allows users to correspond with historical figures via email. The AI|Writer website says it's "capable of responding in the voice of virtually any historical, public or popular fictional character." To illustrate, creator Andrew Mayne asked Alfred Hitchcock to give his thoughts on how Interstellar compares to 2001: A Space Odyssey.

With varying levels of success, LLMs have written in the style of the New Yorker, the Guardian, Harry Potter books, Emily Dickinson, and pretentious literati on Twitter. The list goes on. Like, forever.

Calamity AI made a short film featuring a reading of what they call a “Dr. Seuss” book written by the GPT-3-based app they use, ShortlyAI. The book itself is actually a classic example of LLM strengths and weaknesses. In some ways it's eerily accurate. It opens like a Seuss book, but then fails to rhyme or even keep track of its own story. In one line, the Mayor of a town calls for 30 minutes of silence. In the next, the town is suddenly putting on a play. It’s like Dr. Seuss meets WandaVision.

Brainstorm

Some of GPT-3’s flaws actually inform its strengths. Its tendency to produce surprising, wacky ideas makes it an excellent tool for brainstorming, as software designer Ben Syverson describes in an IDEO blog post. In this way, LLMs function like a Magic 8-Ball with the ability to produce a near infinite variety of answers.

Toxicity, Bigotry & Some Really Dark Shit

Unfortunately, training on human content makes LLMs function like the psychoactive pink slime beneath New York City in Ghostbusters II. They absorb the good, the bad, the racism and the horrifying misogyny. LLMs have demonstrated a tendency to replicate misinformation, discrimination, and hate speech. Widely deployed, they could even create health and safety issues. In testing, chatbots built to provide automated medical advice recommended suicide to a simulated mental health patient. LLM creators like OpenAI have attempted to patch these and other issues with “toxicity filters.” So far, though, the filters don't work very well.

What Can’t They Do?

Plot, Continuity, & Fact Checking, to Name a Few…

LLMs aren’t yet capable of consistently following a storyline, formulating a plot, or even just making sense for more than 1,000 words. Before long, they lose their sense of where they’ve been and where they’re going. So for now, good LLM writing is only possible with a human at the helm.

LLMs also have no way of ensuring they don’t get the facts completely wrong. Their datasets are mostly web content, which is rife with misinformation. Again, without moderation, there is danger of inflicting real damage.

Comedy

I’m not convinced LLMs can truly do humor, though the absurdity of their output is frequently hilarious. An LLM cannot subtly lay the groundwork for a joke over time, then make your mind do somersaults at the punchline. Since the models don’t “think” or “understand” language (or human life), they’re unable to make the brilliant connections crucial to good comedy.

Be Human.

The most obvious thing an AI language model cannot do is something it will never have the ability to do: create works of human art. Seriously, this is worth mentioning. Imagine the most impressive work of art you’ve ever seen. Now imagine it was created by an AI-driven mechanism. Even if it’s good, even if it’s fantastic, is it art?

What Could This Mean for Screenwriting?

Don’t let the failings of current LLMs reassure you too much, though. Here's what the technology is capable of today: rather than hiring a professional screenwriter, producers could use LLMs to generate story beats. They could feed those beats back into the model to produce scenes, selecting the best of several options. A script coordinator or writers’ PA could patch them together and edit for continuity. An established screenwriter might be consulted on story or hired for polishing. And just like that, the role of professional screenwriter shrinks as machines do more of the work.
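For the technically curious, that workflow is simple enough to sketch in a few lines of Python. This is a hypothetical outline under my own assumptions, not anyone's actual production pipeline: `generate` stands in for a call to whatever language model is being used, `pick_best` stands in for the human choosing among takes, and the fake generator below exists only so the sketch runs without a real model.

```python
from typing import Callable, List, Tuple

def draft_script(
    premise: str,
    generate: Callable[[str], str],
    pick_best: Callable[[List[str]], str],
    n_beats: int = 3,
    takes_per_scene: int = 3,
) -> List[str]:
    """Generate story beats, then several takes per beat, keeping one each."""
    # Step 1: ask the model for story beats.
    beats = [
        generate(f"Premise: {premise}\nStory beat {i + 1}:")
        for i in range(n_beats)
    ]
    # Step 2: feed each beat back in, several times, and keep the chosen take.
    scenes = []
    for beat in beats:
        candidates = [
            generate(f"Write a scene for this beat: {beat}")
            for _ in range(takes_per_scene)
        ]
        scenes.append(pick_best(candidates))
    return scenes

def make_fake_generator() -> Tuple[Callable[[str], str], List[str]]:
    """Stand-in for a real model: returns numbered placeholder text."""
    calls: List[str] = []

    def generate(prompt: str) -> str:
        calls.append(prompt)
        return f"[generated text #{len(calls)}]"

    return generate, calls

generate, calls = make_fake_generator()
scenes = draft_script(
    "Mad Musician Baffles Yearning Heiress",
    generate,
    pick_best=lambda takes: takes[0],  # placeholder for a human's choice
    n_beats=2,
    takes_per_scene=3,
)
print(len(scenes), "scenes from", len(calls), "model calls")
```

Notice where the people are in this sketch: one function argument for choosing takes, and whoever stitches the scenes together afterward.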

As these programs become more sophisticated and widely available, will anyone insist on paying screenwriters fair wages?

Of course, any discussion of what AI can do is only temporarily accurate, since the technology is constantly changing. New models are being released each year that blow previous models out of the water, with 10x or 100x the power. These changes are what took LLM screenwriting capabilities from “toddler playing Mad Libs” to “David Lynch fever dream,” in just a couple of years. How long until a model reaches the level of full-length “predictable saccharine rom-com,” or “troubled detective murder mystery”?

AI or Human? We May Never Know.

Once LLMs improve, we may not know whether something was written by human or machine. There are currently no laws requiring disclosure. Even for people wanting to support human writers, it could become difficult to avoid daily consumption of AI generated content.

If the optics are bad for business, use of AI writing might simply go underground, with entertainment studios hiring the opposite of ghostwriters to be the public human face of a story.

Something similar is already happening with script analysis. Companies like Cinelytic say they’ve been contracted by major entertainment studios, but are unable to publicly announce much of their clientele. Studio executives don’t want the public to know how much they rely on data analytics for creative processes.

Stay Tuned…

What was once science fiction is now modern life. What that will mean for film remains to be seen. Legislation has famously struggled to keep up with new technology and the challenges it presents. Even in their current state, LLMs have numerous implications for creative rights and intellectual property. Who, exactly, is seeing to that?

One thing is clear – what's at stake is too important to leave the decisions in the hands of technology companies. Filmmakers, writers, composers, actors – everyone invested in the future of film should join the conversation.

Film ♥︎ Data is here to bring that conversation to life, and keep it going as our world moves into the unknown.